A Comparative Study of MLOps and LLMOps

Author: Ammar Malik

02 August, 2024

As the field of AI continues to develop at a tremendous rate, two interconnected disciplines have emerged for managing these technologies in operation: Machine Learning Operations (MLOps) and Large Language Model Operations (LLMOps). MLOps focuses on the end-to-end lifecycle management of machine learning models, from data preparation and model training to deployment and monitoring. LLMOps covers the same lifecycle and can be considered a more specialized subset of MLOps. Although the two share common principles and practices, LLMOps has its own complexities and considerations, from managing the hardware needed to train and run LLMs to addressing issues like hallucination, bias, and safety. In this blog post, we’ll explore the key differences between MLOps and LLMOps, their respective challenges, and the emerging best practices for effectively operationalizing both traditional machine learning models and state-of-the-art language models.

Understanding MLOps

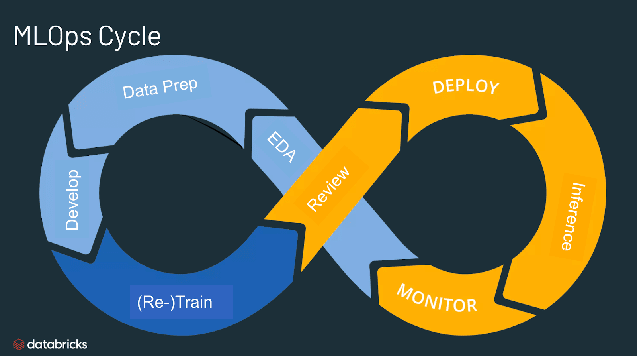

As mentioned earlier, MLOps is a set of practices and principles that aims to optimize and streamline the end-to-end lifecycle of ML models. At its core, it bridges the gap between development and deployment while ensuring that models are reliable, scalable, and continuously improving. Key principles include collaboration between data scientists, engineers, and stakeholders; automation of repetitive tasks; and continuous integration and delivery (CI/CD). As organizations rely on ML models to drive critical business decisions and processes, the need for robust and efficient model management becomes paramount.

MLOps tooling typically spans four stages. Data management covers the collection, preprocessing, and versioning of training data. Model training involves ML algorithms, hyperparameter tuning, and distributed computing hardware. Deployment relies on containerization, orchestration, and serving models in production-ready environments. Monitoring is essential for checking model performance, detecting data drift, and triggering retraining or updates as needed.
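Drift detection is the easiest of these monitoring steps to show in code. The sketch below is a minimal illustration under stated assumptions, not a standard implementation: it scores drift in a single numeric feature with the Population Stability Index (PSI), one common drift statistic chosen here for illustration, and flags retraining when the score crosses a threshold. The function names and the 0.2 threshold are hypothetical choices.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature using the PSI.

    Bin edges come from the reference (training) sample, so the score measures
    how far the current (production) distribution has moved away from it.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def bin_fractions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        total = len(values)
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / total, eps) for c in counts]

    ref_frac = bin_fractions(reference)
    cur_frac = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))


def needs_retraining(reference, current, threshold: float = 0.2) -> bool:
    # 0.2 is a commonly used rule-of-thumb cutoff, not a universal standard;
    # tune it per feature.
    return population_stability_index(reference, current) > threshold
```

In a real pipeline, `reference` would come from the versioned training data and `current` from a recent window of production inputs, with the check run per feature on a schedule.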

Definition and Scope of LLMOps

LLMOps is an emerging discipline that focuses on the operations and lifecycle management of Large Language Models. Because these models engage in open-ended natural language interactions, they present unique challenges that require even more specialized practices and tools. One of the biggest challenges of LLMOps is on the hardware side: these models have billions of parameters and need vast computational resources to train and serve. This requires specialized hardware such as Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs), along with distributed computing infrastructure.
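A back-of-the-envelope calculation shows why these hardware demands are so large. Assuming the weights alone must fit in accelerator memory, the footprint is roughly the parameter count multiplied by the bytes per parameter. The sketch below uses a hypothetical 7-billion-parameter model purely as an illustrative size; it ignores activations, the key-value cache used during generation, and the gradients and optimizer state needed for training, all of which add to the total.

```python
# Approximate storage cost of one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory needed just to hold the model weights, in gigabytes (10**9 bytes).

    Activations, KV cache, gradients, and optimizer state are NOT included,
    so real requirements are higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Illustrative model size only, not a specific model.
for precision in ("fp32", "fp16", "int8", "int4"):
    print(f"7B parameters @ {precision}: {weight_memory_gb(7e9, precision):.1f} GB")
# 7B parameters @ fp32: 28.0 GB
# 7B parameters @ fp16: 14.0 GB
# 7B parameters @ int8: 7.0 GB
# 7B parameters @ int4: 3.5 GB
```

Lower-precision formats shrink the footprint proportionally, which is one reason quantization is common when serving LLMs.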

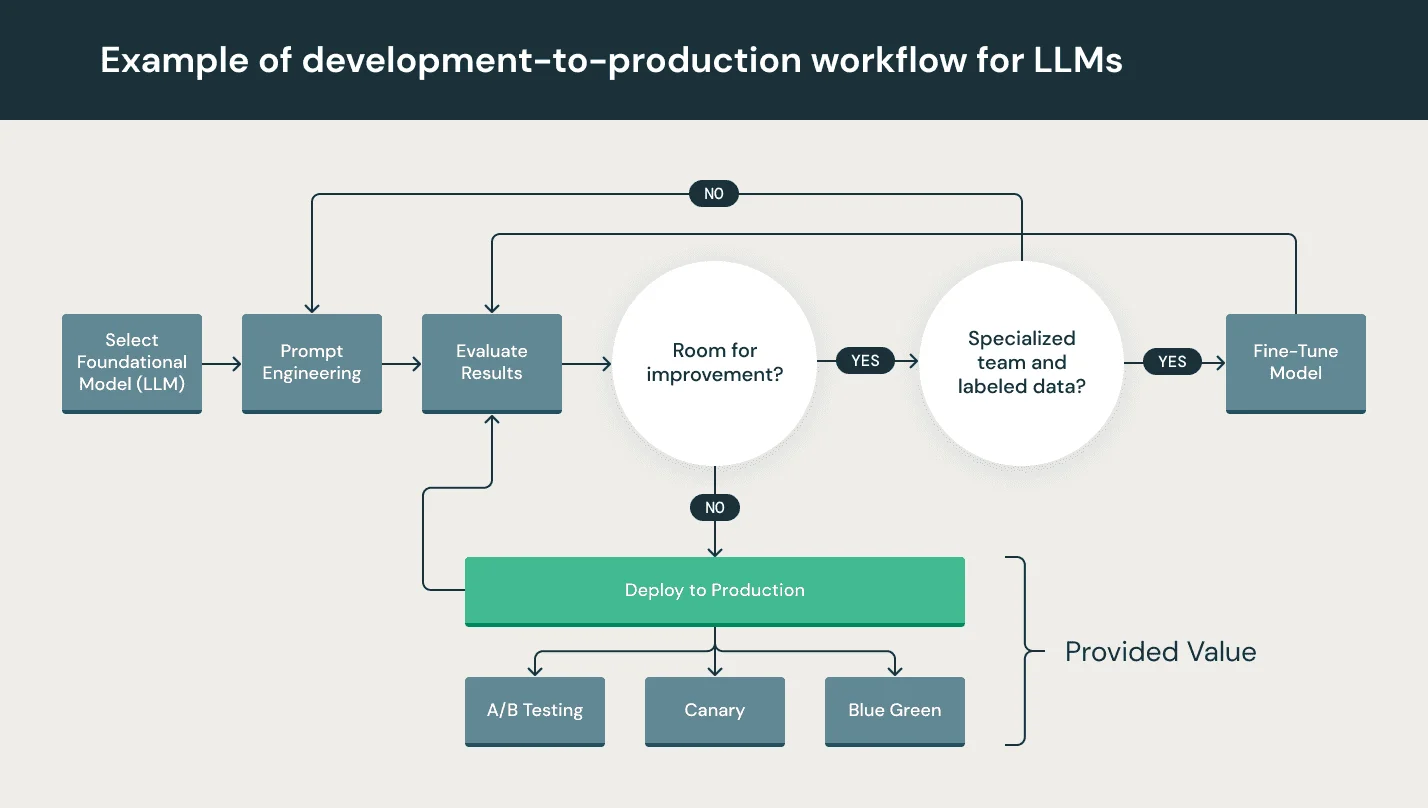

Managing these resources cost-effectively is an essential part of LLMOps, since it directly affects performance and scalability.

LLMs are also highly sensitive to the quality and diversity of their training data, because they can “learn” any bias it contains. Such biases and inconsistencies can significantly affect the model’s behavior, so data curation, cleaning, and preprocessing are carried out rigorously to make the training data as robust as possible.

After training comes model evaluation and testing. LLMs need different metrics that assess qualities such as coherence, fluency, and safety, and techniques like human evaluation, adversarial testing, and varied prompting strategies are often used to assess how LLMs perform and behave in different contexts. As LLMs become more prevalent and influential, addressing bias, safety, and ethical concerns becomes paramount. LLMOps must incorporate practices and safeguards that mitigate the risk of generating harmful, biased, or misleading content, such as bias detection and mitigation, content filtering, and ethical guidelines and principles. LLMOps must also consider the broader societal implications of LLM deployments and prioritize responsible AI practices.
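The data curation step described above can be sketched in a few lines. This minimal example assumes the corpus is a list of plain-text documents: it normalizes whitespace and case, drops very short documents, and removes exact duplicates by hashing. The `curate` and `normalize` helpers and the `min_words` cutoff are hypothetical; real LLM data pipelines typically go further, with near-duplicate detection and learned quality or toxicity filters.

```python
import hashlib
import re
from typing import Iterable, Iterator


def normalize(text: str) -> str:
    # Collapse whitespace runs and lowercase so trivially different copies hash the same.
    return re.sub(r"\s+", " ", text).strip().lower()


def curate(docs: Iterable[str], min_words: int = 5) -> Iterator[str]:
    """Yield documents that pass a length filter, dropping exact duplicates."""
    seen = set()
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        yield doc  # keep the original text, not the normalized form


corpus = [
    "The quick brown fox jumps over the lazy dog.",
    "the quick  brown fox jumps over the LAZY dog.",  # duplicate after normalizing
    "Too short.",
    "Large language models are sensitive to the quality of their training data.",
]
print(list(curate(corpus)))  # keeps the first and last documents
```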

Differences Between MLOps and LLMOps

One of the key differences concerns scalability. Traditional machine learning models, while they can be computationally intensive, have far fewer parameters than LLMs and can often be deployed on modest hardware. LLMs are massive by comparison: their billions of parameters demand vast amounts of computational power for both training and inference. Their intricate architectures and many layers also make them more challenging to interpret, debug, and optimize. LLMOps must therefore employ specialized techniques and tools to handle this complexity, such as model compression, quantization, and efficient inference strategies.

The evaluation and testing methodologies for LLMs also differ substantially from those used for traditional machine learning models. While MLOps relies on metrics like accuracy, precision, and recall, LLMOps requires more nuanced evaluation techniques that capture the open-ended nature of language generation. This may involve human evaluation, adversarial testing, and specialized measures such as perplexity, coherence, and fluency.
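Of the measures listed above, perplexity is the one with a precise formula: the exponential of the average negative log-probability the model assigns to each token of a reference text. The sketch below assumes you have already obtained per-token natural-log probabilities from a model; how to retrieve them depends on the serving stack and is not shown. Note that perplexity measures how well a model fits text, not whether its output is factual or safe, which is why it is usually paired with human evaluation.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity from natural-log probabilities, one per token.

    PPL = exp(-(1/N) * sum(log p(x_i | x_<i))). Lower means the model found
    the text less surprising.
    """
    if not token_log_probs:
        raise ValueError("need at least one token")
    avg_neg_log_likelihood = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_neg_log_likelihood)


# If a model spreads probability evenly over 4 choices at every step,
# its perplexity is 4: it is as uncertain as a fair 4-way guess.
uniform = [math.log(0.25)] * 10
print(f"{perplexity(uniform):.2f}")  # 4.00
```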

As LLMs become increasingly powerful and influential, responsible AI and ethical considerations take on heightened importance in LLMOps. These models have the potential to generate harmful or biased content, perpetuate stereotypes, and even influence public opinion and decision-making. LLMOps must prioritize practices that mitigate these risks, such as bias detection and mitigation, content filtering, and the implementation of ethical guidelines and principles. Additionally, LLMOps must consider the broader societal implications of LLM deployments and strive to uphold values of transparency, accountability, and responsible AI.
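Content filtering, one of the safeguards mentioned above, usually sits between the model and the user. The sketch below is a deliberately tiny, rule-based illustration of where such a check fits in the response path; the patterns, the placeholder terms, and the `check_output` and `guarded_response` helpers are all hypothetical. Production systems typically rely on trained safety classifiers or moderation services rather than hand-written rules like these.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical, deliberately tiny policy for illustration only.
BLOCKED_PATTERNS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "looks like a US Social Security number"),
]
BLOCKED_TERMS = {"forbidden_term_a", "forbidden_term_b"}  # placeholder terms


@dataclass
class FilterResult:
    allowed: bool
    reason: Optional[str] = None


def check_output(text: str) -> FilterResult:
    """Screen a model response before it is returned to the user."""
    for pattern, reason in BLOCKED_PATTERNS:
        if pattern.search(text):
            return FilterResult(allowed=False, reason=reason)
    words = set(re.findall(r"[a-z_]+", text.lower()))
    hits = words & BLOCKED_TERMS
    if hits:
        return FilterResult(allowed=False, reason=f"blocked term(s): {sorted(hits)}")
    return FilterResult(allowed=True)


def guarded_response(text: str, fallback: str = "Sorry, I can't help with that.") -> str:
    # Return the model's text only if it passes the filter; otherwise a safe fallback.
    return text if check_output(text).allowed else fallback
```

Keeping the verdict and its reason in a small result object makes it easy to log why a response was blocked, which supports the transparency and accountability goals described above.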

Conclusion

As the field of artificial intelligence continues to evolve at a rapid pace, the disciplines of MLOps and LLMOps have emerged as critical components in harnessing the power of machine learning and large language models, respectively. While MLOps and LLMOps share some common principles and practices, the unique challenges and complexities associated with operationalizing LLMs necessitate specialized approaches and considerations.

From managing the immense computational resources and data requirements to addressing issues of bias, safety, and ethical concerns, LLMOps is a rapidly evolving field that demands rigorous practices and a deep understanding of the nuances of these powerful language models. As LLMs become increasingly prevalent in various industries and applications, the importance of LLMOps will only continue to grow.

Ultimately, the success of both MLOps and LLMOps hinges on collaboration, knowledge sharing, and the development of best practices within their respective communities. By embracing responsible AI principles, leveraging cutting-edge tools and techniques, and fostering a culture of continuous learning and improvement, organizations can unlock the full potential of these transformative technologies while mitigating risks and upholding ethical standards.
