A Journey Through Neural Networks: Types, Functions, and Uses

Author: Inza Khan

10 June, 2024

Neural networks find applications across diverse domains such as computer vision, natural language processing, robotics, healthcare, finance, and more. They are used in tasks like image recognition, speech processing, sentiment analysis, and predictive modeling.

In this blog, we’ll explore the fundamentals of neural networks and delve into the various types that exist. From basic perceptrons to sophisticated convolutional neural networks (CNNs) and recurrent neural networks (RNNs), each type serves specific purposes and excels in different applications.

What Are Neural Networks?

Neural networks, also known as artificial neural networks (ANNs), are computing systems inspired by the human brain’s functioning. They consist of interconnected nodes or neurons that communicate with each other, similar to how neurons in our brain transmit signals.

Neural networks are an important component of machine learning, a field in which computers learn from data without being explicitly programmed. Within machine learning, neural networks play a central role in deep learning, which uses networks with many layers to learn patterns directly from data with little manual feature engineering. For example, a deep learning model based on neural networks can be trained to identify objects in images it hasn’t seen before, given enough training data.

Types of Neural Networks

Perceptron

The perceptron is one of the simplest models of a neuron in a neural network. It receives input signals, multiplies each one by a weight, sums the results, and produces an output signal. The output is determined by a threshold function, which evaluates whether the weighted sum of inputs exceeds a certain threshold.

Applications:

- Logic Gates: Perceptrons can implement basic logic gates like AND, OR, and NAND gates. They are the building blocks for constructing more complex neural networks.

- Binary Classification: Perceptrons are effective for binary classification tasks where the input data can be divided into two categories by a linear decision boundary. For example, classifying emails as spam or not spam.
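To make the threshold idea concrete, here is a minimal sketch of a perceptron acting as AND, OR, and NAND gates. The weights and biases are hand-picked for illustration rather than learned, and the names `perceptron`, `GATES`, and `gate` are our own.

```python
def perceptron(inputs, weights, bias):
    """Return 1 when the weighted sum plus bias is positive, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if weighted_sum > 0 else 0

# Hand-picked (not learned) parameters: one (weights, bias) pair per gate.
GATES = {
    "AND": ([1.0, 1.0], -1.5),
    "OR": ([1.0, 1.0], -0.5),
    "NAND": ([-1.0, -1.0], 1.5),
}

def gate(name, a, b):
    """Evaluate the named gate on two binary inputs."""
    weights, bias = GATES[name]
    return perceptron([a, b], weights, bias)

for name in GATES:
    # Inputs are visited in the order (0,0), (0,1), (1,0), (1,1).
    print(name, [gate(name, a, b) for a in (0, 1) for b in (0, 1)])
# AND [0, 0, 0, 1]
# OR [0, 1, 1, 1]
# NAND [1, 1, 1, 0]
```

The bias plays the role of the threshold: moving it changes how much weighted input is needed before the neuron fires.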

Feed Forward Neural Networks (FFNN)

Feed forward neural networks are the simplest class of network architecture: data flows in one direction, from input nodes through any hidden layers to output nodes. There are no feedback connections; information moves only forward through the network.

Applications:

- Classification and Recognition: FFNNs are commonly used for classification tasks such as image recognition, speech recognition, and handwriting recognition. They analyze input data and classify it into different categories.

- Pattern Recognition: They are also used in pattern recognition tasks, such as identifying patterns in financial data or predicting trends in stock prices.
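The one-directional flow can be sketched in a few lines. This is an illustration only: the weights are arbitrary, the sigmoid activation is our assumption (the text does not name one), and `dense` and `forward` are hypothetical helper names.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass the data through each layer in order; nothing flows backward."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations

# Illustrative parameters: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.2, 0.5]], [0.05]),
]
output = forward([1.0, 2.0], layers)
print(output)  # a single value between 0 and 1
```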

Multilayer Perceptron (MLP)

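Multilayer perceptrons are feed forward networks with one or more hidden layers between the input and output layers. The layers are fully connected: each neuron connects to every neuron in the subsequent layer. Combined with nonlinear activation functions, the hidden layers let the network learn decision boundaries that a single perceptron cannot.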

Applications:

- Classification: MLPs are widely used for classification tasks in various fields, including medical diagnosis, sentiment analysis, and fraud detection. They can handle complex data and extract meaningful features for accurate classification.

- Prediction: They are also used for predictive modeling tasks, such as forecasting sales, predicting customer behavior, and estimating financial market trends.
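A classic illustration of what a hidden layer adds is XOR, which a single perceptron cannot compute because the two classes are not linearly separable. The sketch below uses hand-set weights (not trained ones) and a step activation; `xor_mlp` is a name we made up for the example.

```python
def step(z):
    return 1 if z > 0 else 0

def neuron(inputs, weights, bias):
    """A single threshold neuron."""
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)

def xor_mlp(a, b):
    """Two-layer network with hand-set weights: XOR = AND(OR(a, b), NAND(a, b))."""
    hidden_or = neuron([a, b], [1.0, 1.0], -0.5)
    hidden_nand = neuron([a, b], [-1.0, -1.0], 1.5)
    # The output neuron combines the two hidden activations with an AND.
    return neuron([hidden_or, hidden_nand], [1.0, 1.0], -1.5)

print([xor_mlp(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 1, 1, 0]
```

In practice the weights would be learned with backpropagation rather than written by hand.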

Convolutional Neural Network (CNN)

Convolutional neural networks are designed for processing grid-like data, such as images or video frames. They consist of convolutional layers that slide small learned filters over the input to extract local features such as edges, typically followed by pooling layers that downsample the resulting feature maps to reduce dimensionality.

Applications:

- Image Processing: CNNs are widely used in image processing tasks, including object detection, image classification, and facial recognition. They can identify objects and patterns within images with high accuracy.

- Computer Vision: They are also used in computer vision applications, such as autonomous driving, medical image analysis, and augmented reality.
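Here is a small sketch of the two operations described above, written in plain Python so every step is visible. The kernel is a hand-written vertical-edge detector (a trained CNN would learn its kernels), and `conv2d` and `max_pool2d` are our own helper names, not a library API.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 cross-correlation, the operation that
    deep learning libraries usually call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows and columns are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + dr][c * size + dc]
                for dr in range(size) for dc in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]

# 5x5 image: dark columns (0) on the left, bright columns (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]  # responds where brightness increases left to right

features = conv2d(image, kernel)  # 4x4 feature map; every row is [0, 2, 0, 0]
pooled = max_pool2d(features)     # 2x2 map: [[2, 0], [2, 0]]
print(pooled)
```

The feature map is nonzero only at the edge, and pooling keeps that signal while shrinking the map from 4x4 to 2x2.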

Radial Basis Function Neural Network (RBFNN)

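Radial Basis Function Neural Networks classify data based on its similarity to prototypes stored during training. Each hidden neuron holds one prototype and computes its output from the distance between the input and that prototype, typically with a Gaussian function so that closer inputs produce stronger activations.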

Applications:

- Anomaly Detection: RBFNNs are used for anomaly detection tasks, such as detecting fraudulent transactions in financial data or identifying anomalies in network traffic.

- Pattern Recognition: They are also used in pattern recognition tasks, such as speech recognition, where they can identify patterns in audio data and classify spoken words.
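The distance-to-prototype idea can be sketched as follows. This is a simplified illustration: the prototypes and labels are made up, the Gaussian width `gamma` is an arbitrary choice, and every output weight is fixed at 1, whereas a trained RBF network would learn those weights.

```python
import math

def rbf(x, prototype, gamma=1.0):
    """Gaussian radial basis function: 1.0 at the prototype, falling toward 0 with distance."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, prototype))
    return math.exp(-gamma * squared_distance)

def classify(x, prototypes):
    """Sum the RBF activations per class and return the class with the largest total."""
    scores = {}
    for point, label in prototypes:
        scores[label] = scores.get(label, 0.0) + rbf(x, point)
    return max(scores, key=scores.get)

# Hypothetical prototypes; in practice they are chosen during training.
prototypes = [
    ((0.0, 0.0), "normal"),
    ((0.5, 0.5), "normal"),
    ((5.0, 5.0), "anomaly"),
]
print(classify((0.2, 0.1), prototypes))  # normal
print(classify((4.6, 5.3), prototypes))  # anomaly
```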

Recurrent Neural Network (RNN)

Recurrent neural networks are designed to process sequential data by maintaining a memory of previous time steps. They have connections that form directed cycles, allowing information to persist over time.

Applications:

- Natural Language Processing: RNNs are widely used in natural language processing tasks, such as language translation, sentiment analysis, and text generation. They can model the sequential nature of language and generate contextually relevant responses.

- Time Series Prediction: They are also used in time series prediction tasks, such as weather forecasting, stock price prediction, and sales forecasting.
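The memory of previous time steps comes from feeding the hidden state back into the network at every step. Below is a minimal single-unit sketch with arbitrary, untrained weights; `rnn_step` and `run_rnn` are illustrative names of our own.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: the new state mixes the current input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Feed the sequence one element at a time; return the hidden state after each step."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
print(states_a)  # still nonzero at the last step: the first input is remembered
print(states_b)  # all zeros: there was nothing to remember
```

The last two inputs are identical in both runs, yet the final states differ, because the state carries information from the first step. Notice that the remembered value shrinks at every step, which hints at why plain RNNs struggle with long sequences.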

Long Short-Term Memory (LSTM) Networks

LSTM networks address a key limitation of traditional RNNs, which struggle to learn dependencies that span many time steps. They introduce special units with memory cells capable of retaining information over long sequences, along with gating mechanisms (input, forget, and output gates) that control the flow of information, allowing them to learn and remember longer-term dependencies.

Applications:

- Language Modeling: LSTMs are used in language modeling tasks, such as text generation and speech recognition, where they can capture long-range dependencies and context.

- Sequence Prediction: They are also used in sequence prediction tasks, such as predicting the next word in a sentence or forecasting future values in a time series.
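The gating mechanism is easier to follow in code. This sketch implements one step of a single-unit LSTM cell using the standard gate equations; the parameters are hand-picked (not trained) so that the cell writes when the input is large and otherwise holds its memory, and the `params` layout is our own convention.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell. `p` maps gate name -> (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much new information to write
    g = math.tanh(pre("candidate"))   # the new information itself
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c

# Hand-picked parameters: forget gate nearly 1, input gate opens only for large x.
params = {
    "forget": (0.0, 0.0, 6.0),
    "input": (10.0, 0.0, -5.0),
    "candidate": (2.0, 0.0, 0.0),
    "output": (0.0, 0.0, 6.0),
}

h, c = 0.0, 0.0
for x in [1.0] + [0.0] * 20:  # one signal followed by 20 empty steps
    h, c = lstm_step(x, h, c, params)
print(c)  # most of the value written at the first step is still in the cell
```

Because the forget gate stays close to 1, the cell state decays only slightly over the 20 empty steps.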

Sequence to Sequence Models

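In their classic form, sequence to sequence models consist of two RNNs, an encoder and a decoder. The encoder reads the input sequence and compresses it into a context representation, and the decoder generates the output sequence from that context. Because the two halves run separately, the model is particularly useful in tasks where the lengths of the input and output sequences differ.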

Applications:

- Machine Translation: Sequence to sequence models are widely used in machine translation tasks, such as translating text from one language to another. They can handle variable-length input and output sequences and generate accurate translations.

- Text Summarization: They are also used in text summarization tasks, where they can generate concise summaries of long documents or articles.
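The encoder and decoder split can be sketched structurally. Everything here is a toy under stated assumptions: the weights are arbitrary and untrained, the sequences are plain numbers rather than words, and stopping when the output falls below a threshold is only a stand-in for the end-of-sequence token a real model would emit.

```python
import math

def encode(sequence, w_x=0.6, w_h=0.4):
    """Encoder RNN: read the whole input and return the final hidden state as the context."""
    h = 0.0
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h)
    return h

def decode(context, max_steps=10, stop_below=0.1, w_y=0.3, w_h=0.5):
    """Decoder RNN: start from the context and feed each output back in as the
    next input, stopping at the threshold or after `max_steps`."""
    h, y = context, 0.0
    outputs = []
    for _ in range(max_steps):
        h = math.tanh(w_y * y + w_h * h)
        y = h
        if abs(y) < stop_below:
            break
        outputs.append(y)
    return outputs

short_context = encode([1.0, 0.5])
long_context = encode([1.0, 0.5, -0.2, 0.8, 0.3, 0.9, 0.1])
print(short_context, long_context)  # both inputs are reduced to a single number
print(decode(long_context))         # output length is decided by the decoder
```

The point of the sketch is the shape of the computation: inputs of any length become a fixed-size context, and the decoder decides on its own when to stop.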

Modular Neural Network

Modular neural networks consist of independent modules that perform specific sub-tasks without interacting with each other while they compute. Each module focuses on a distinct aspect of the overall task, allowing for parallel processing and efficient computation, and the module outputs are then combined to produce the final result.

Applications:

- Stock Market Prediction: Modular neural networks are used in stock market prediction systems, where different modules analyze different aspects of market data, such as price movements, volume trends, and news sentiment.

- Character Recognition: They are also used in character recognition tasks, such as optical character recognition (OCR), where each module processes a different aspect of the input image, such as line detection or character segmentation.
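As a rough sketch of the modular idea, the example below runs three independent modules and then combines their outputs. All of it is hypothetical: each module is a single sigmoid neuron standing in for a full sub-network, the weights and feature values are invented, and a plain average stands in for a learned integrator.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def make_module(weights, bias):
    """Build a stand-in sub-network (a single sigmoid neuron) for one sub-task."""
    def module(features):
        return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
    return module

# Hypothetical modules, each with its own illustrative weights and its own inputs.
modules = {
    "price": make_module([0.9, -0.4], 0.0),
    "volume": make_module([0.6], -0.2),
    "sentiment": make_module([1.2], 0.1),
}

def run_modules(inputs):
    """Run every module on its own slice of the input; no module sees another's output."""
    return {name: module(inputs[name]) for name, module in modules.items()}

def integrate(module_outputs):
    """Combine the module outputs; a plain average stands in for a learned integrator."""
    return sum(module_outputs.values()) / len(module_outputs)

inputs = {"price": [0.5, 0.1], "volume": [0.8], "sentiment": [-0.3]}
module_outputs = run_modules(inputs)
signal = integrate(module_outputs)
print(module_outputs, signal)
```

Because the modules share nothing, they could run in parallel, and one module can be retrained or replaced without touching the others.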

Working of Neural Networks

Neural networks excel at learning from data, improving their performance as they encounter more examples. They learn to recognize patterns and associations between input and output, enabling them to generalize their understanding and make accurate predictions with new data.
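To see learning from examples in action, here is a minimal sketch that trains a single perceptron on the AND function with the classic perceptron learning rule. We picked this rule for brevity; larger networks are typically trained with backpropagation and gradient descent. The function names and the learning rate of 1.0 are our own choices.

```python
def predict(weights, bias, inputs):
    """Threshold unit: 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0

def train_perceptron(examples, learning_rate=1.0, epochs=20):
    """Nudge the weights toward the target every time a prediction is wrong."""
    weights, bias = [0.0, 0.0], 0.0
    errors_per_epoch = []
    for _ in range(epochs):
        errors = 0
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                errors += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        errors_per_epoch.append(errors)
    return weights, bias, errors_per_epoch

AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias, errors_per_epoch = train_perceptron(AND_DATA)
print(errors_per_epoch)  # mistakes per pass over the data, dropping to 0
print([predict(weights, bias, x) for x, _ in AND_DATA])  # [0, 0, 0, 1]
```

The error count per pass falls to zero once the weights separate the two classes, which is the "improving with more examples" behaviour in miniature.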

Neural Network Architecture

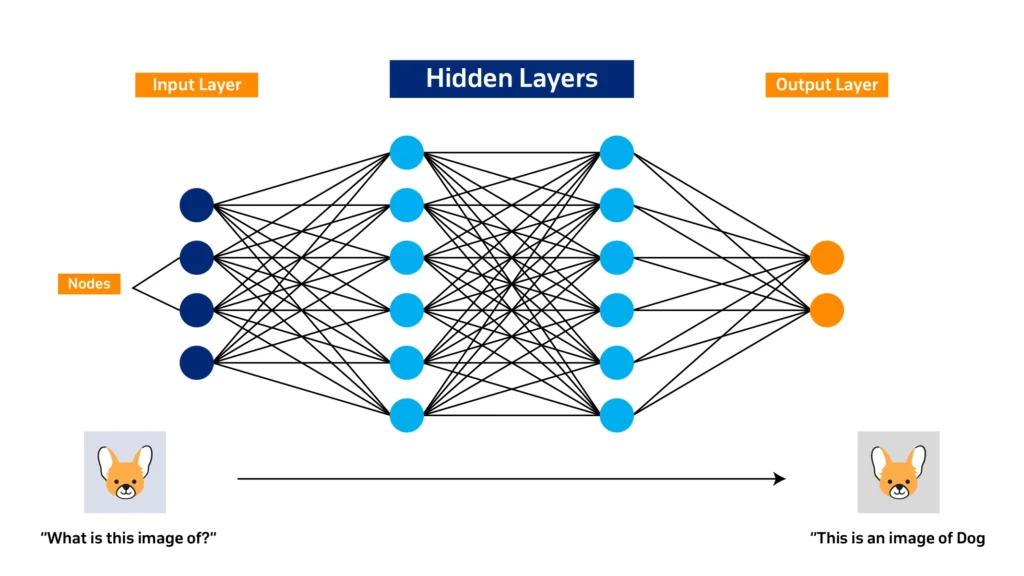

A simple neural network comprises three interconnected layers:

- Input Layer: This layer receives information from the outside world. Input nodes hold the raw feature values and pass them on to the next layer; they typically perform no computation of their own.

- Hidden Layer: Hidden layers take input from the input layer or other hidden layers. They transform the data with weighted sums and activation functions and pass the result on to subsequent layers. Neural networks can have multiple hidden layers to enhance their processing capabilities.

- Output Layer: The output layer provides the final result of the data processing. It may consist of one or multiple nodes, depending on the nature of the problem. For binary classification, there is one output node, while for multi-class classification, there are multiple output nodes.
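The difference between the two output layouts can be sketched as follows. The choice of activations is our assumption: a sigmoid on a single node for binary classification and a softmax across several nodes for multi-class problems are common conventions, but the text above does not prescribe them. The raw scores are made-up numbers.

```python
import math

def sigmoid(z):
    """Squash one raw score into a probability for the positive class."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Turn one raw score per class into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract the max for stability
    total = sum(exps)
    return [e / total for e in exps]

# Binary classification: one output node.
spam_probability = sigmoid(1.2)

# Multi-class classification: one output node per class.
class_probabilities = softmax([2.0, 0.5, -1.0])
predicted_class = class_probabilities.index(max(class_probabilities))

print(spam_probability)
print(class_probabilities, predicted_class)
```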

Essential Procedures of Neural Networks

Neural networks rely on four key procedures:

- Pattern Recognition: They learn to identify patterns by training on labeled data, enabling accurate classification or prediction.

- Categorization: Neural networks organize information into established categories, facilitating efficient data processing and decision-making.

- Clustering: They group unlabeled data instances by similarity, so that categories emerge from the intrinsic characteristics of the data rather than from predefined labels.

- Prediction: Neural networks generate anticipated outcomes using relevant input data, even when complete information is not available, enabling proactive decision-making.

Simple neural networks have few hidden layers, while deep neural networks (DNNs) have many. DNNs can process large amounts of data and extract complex patterns, making them suitable for advanced tasks.

Conclusion

Neural networks are vital in machine learning, transforming various fields by recognizing patterns and making predictions without explicit programming. From basic perceptrons to complex CNNs and RNNs, each type serves specific purposes. Perceptrons handle simple binary classification, while CNNs dominate image processing tasks. RNNs are suited to sequential data processing, and LSTM networks mitigate their difficulty with long-term dependencies. Sequence-to-sequence models are essential for tasks like machine translation, while modular neural networks offer efficient parallel processing. Overall, neural networks, by learning from data and improving with experience, promise a future of intelligent automation and decision support systems.