Exploring Scalability Dimensions on Microsoft Azure

Author: Tyler Faulkner

In an era where business agility is key, scalable cloud solutions are essential. Microsoft Azure provides many out-of-the-box features to help modern applications remain fast and functional under nearly any conditions. These tools allow Cloud Engineers and Architects to keep their applications agile in today’s cloud computing landscape. In this article, we will provide an overview of the key concepts of scalability in the cloud and show how to apply these principles in the Azure ecosystem.

Scaling Up vs. Scaling Out

Scaling up and scaling out are the two fundamental approaches to scalability, and they serve as the foundation for the many scaling solutions used today. These concepts are also referred to as scaling vertically and scaling horizontally, respectively. Scaling up is the act of provisioning better hardware for a single machine. Scaling out, by contrast, is provisioning more machines rather than upgrading an existing one. As an example, say that you are running an application that is utilizing 100% of your CPU. To scale up, you would buy a better CPU. To scale out, you would add more machines, each with its own CPU, to share the load.

Generally, scaling up is seen as the more straightforward of the two options. No architecture changes are required, and you get a faster application; however, provisioning more performant hardware is often more expensive than provisioning more of the same hardware. The downside of scaling out is that it often requires thoughtful architecture to implement and execute effectively. In return, scaling out is often the much more cost-effective approach in the long run.

Today’s world of cloud computing is built on top of the idea that scaling out is more advantageous than scaling up; thus, platforms like Azure have many prebuilt tools that make scaling out applications simple.

The Axes of Scalability

Since most of the physical work on servers has been abstracted away through Platform as a Service offerings and Infrastructure as a Service offerings, Cloud Engineers and Architects need to focus on a new set of axes when considering scalability.

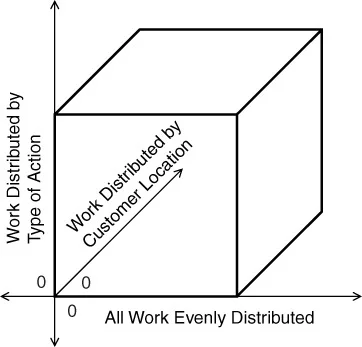

In the book The Art of Scalability (2015) by Martin Abbot and Michael Fischer, the authors describe a new model for determining scalability in an application. The following is a diagram from The Art of Scalability (Abbot, Fischer, 2015) demonstrating the new paradigm for scalability called the AKF Cube (named after their consulting firm AKF Partners):

Image Source: The Art of Scalability (Abbot, Fischer, 2015)

To fully understand these new dimensions, we will examine each axis individually:

- All Work Evenly Distributed (x-axis): The principle at play here is scaling out, as explained earlier. Running the same server on multiple machines and balancing work between them effectively makes an application more scalable along this axis.

- Work Distributed by Type of Action (y-axis): This axis concerns the architecture of your software. The more monolithic your app, the closer it sits to zero on this axis. Breaking apart individual actions and running them as independent microservices allows an application to scale along the y-axis.

- Work Distributed by Customer Location (z-axis): Running your services closer to your customers is key to achieving scalability on this axis. Having your app run in multiple different physical regions allows your customers to connect to your application with lower latency and higher availability.

A theoretically infinitely scalable solution would strive to maximize each axis; however, there are many restrictions (such as cost and resource allotment) that will always prevent an app from being perfectly scalable. The key takeaway from examining the AKF Cube should be an understanding of what makes an application “scalable” and applying this knowledge to current and future projects.
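One way to picture the three axes together is as three successive routing decisions for every request. The following is a minimal sketch under stated assumptions, not a description of how any Azure service works internally: the `Router` class, the region names, the service names, and the instance names are all hypothetical and exist only for illustration.

```python
from itertools import cycle

# Hypothetical deployment map: region -> service -> identical instances.
DEPLOYMENT = {
    "europe": {
        "orders": ["eu-orders-1", "eu-orders-2"],
        "billing": ["eu-billing-1"],
    },
    "us": {
        "orders": ["us-orders-1", "us-orders-2", "us-orders-3"],
        "billing": ["us-billing-1", "us-billing-2"],
    },
}


class Router:
    """Routes a request along the z-, y-, and x-axes of the AKF Cube."""

    def __init__(self, deployment):
        # One round-robin iterator per (region, service) pair.
        self._rotations = {
            (region, service): cycle(instances)
            for region, services in deployment.items()
            for service, instances in services.items()
        }

    def route(self, customer_region: str, action: str) -> str:
        key = (customer_region, action)
        if key not in self._rotations:
            raise KeyError(f"no instances for {action!r} in {customer_region!r}")
        # z-axis: customer_region selects the regional deployment.
        # y-axis: action selects the service that handles this type of work.
        # x-axis: round robin spreads work evenly over identical instances.
        return next(self._rotations[key])


if __name__ == "__main__":
    router = Router(DEPLOYMENT)
    for _ in range(3):
        print(router.route("europe", "orders"))
```

Adding an instance name to one of the lists moves the app along the x-axis, adding a new service key moves it along the y-axis, and adding a new region moves it along the z-axis.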

Azure and Scalability

With the core concepts of scalability in hand, we will now explore how these ideas can be applied and implemented on Azure. Some methods must be implemented directly, but Azure also offers auto-scaling solutions that are baked into the infrastructure, which we will discuss. Scaling up is a relatively simple process on Azure: developers can select different instance tiers with varying numbers of CPU cores and amounts of memory, so discussion will be light on this subject. In general, Azure apps have a Scale section that allows you to select the instance type for the deployment, where each option offers a different number of cores and amount of memory at a different price tier.

Scaling Out

In this section, we will focus on how to expand along the x-axis of the AKF Cube (“All Work Evenly Distributed”) on Azure. Since Azure offers Platform as a Service and Infrastructure as a Service, each with its own distinct scaling methods, we will discuss them separately.

Platform as a Service

A majority of the Platform as a Service options on Azure fall under what is called “Web Apps”, which means the hardware, connections, and infrastructure running the app are completely managed by Azure. There are generally three methods for scaling web apps on Azure:

- Manual: The developer defines a static number of parallel instances to run the server on. Great for easily increasing availability, but it is not adaptable to varying usage in your app.

- Autoscale (rules-based): This method allows developers to create rules and schedules for when to increase or decrease the number of parallel instances available. This improves adaptability but requires developers to manually define the rules.

- Automatic Scaling (preview feature): Azure manages the scaling rules based on the amount of HTTP traffic to the app. Developers can configure the number of always-ready instances and the number of burst instances. The benefit is that this is built into Azure and requires little engineering effort to set up. The downside is that it is much less configurable than Autoscale.

Keep in mind that some of these methods are only available on certain pricing tiers, and that scaling out will generally affect cost because multiple instances run in parallel. If cost is a major concern, I suggest using either manual scaling or Autoscale to have more control over when new instances are created.
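To make the rules-based approach more concrete, here is a minimal sketch of the kind of decision a scale-out/scale-in rule encodes. It is not Azure's implementation or API: the `AutoscaleRule` class, the `next_instance_count` function, and the threshold values are assumptions chosen for illustration, and real Autoscale rules also involve details such as time windows and cooldown periods that are left out here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutoscaleRule:
    """Hypothetical rule: thresholds and limits are illustrative values."""
    scale_out_above: float = 70.0  # average CPU % that adds an instance
    scale_in_below: float = 30.0   # average CPU % that removes an instance
    min_instances: int = 1
    max_instances: int = 5


def next_instance_count(current: int, avg_cpu_percent: float,
                        rule: AutoscaleRule) -> int:
    """Return the instance count after applying the rule to one reading."""
    if avg_cpu_percent > rule.scale_out_above:
        desired = current + 1
    elif avg_cpu_percent < rule.scale_in_below:
        desired = current - 1
    else:
        desired = current
    # Never go below the minimum or above the maximum instance count.
    return max(rule.min_instances, min(rule.max_instances, desired))


if __name__ == "__main__":
    rule = AutoscaleRule()
    instances = 1
    for cpu in [45, 82, 91, 60, 20, 15]:  # made-up CPU readings
        instances = next_instance_count(instances, cpu, rule)
        print(f"cpu={cpu}% -> {instances} instance(s)")
```

The maximum instance count is what keeps cost bounded: however high the load climbs, the rule never provisions more than `max_instances`.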

Infrastructure as a Service

For brevity, we will focus on Virtual Machines when discussing scaling for Infrastructure as a Service. The general idea is similar to Platform as a Service; however, more setup and planning are required because Azure manages less for you. Scaling virtual machines out means running parallel, identical instances behind a load balancer that evenly distributes requests between them. This can be configured manually using an Azure Load Balancer, but, conveniently, Azure provides a tool called Virtual Machine Scale Sets (VMSS) to do most of this setup automatically.

VMSS allows developers to create Autoscale rules in a similar way to web apps, but at the infrastructure level. Rules can be set to define when to increase or decrease the number of instances. There are two orchestration modes available in VMSS for managing the parallel instances:

- Uniform: All instances use identical VM instance sizes and images. Good for stateless applications.

- Flexible: Instances in the set can all be configured individually to suit your application’s needs. Good for stateful workloads.

Using VMSS and load balancers on Azure will allow you to easily scale out your virtual machine workloads.

Microservices

As an application moves up the y-axis of the AKF Cube (“Work Distributed by Type of Action”), it moves closer to a microservice architecture. Scaling in this direction isn’t as simple as copying instances, as it is when scaling out. Often, thoughtful consideration of architecture and system requirements is needed to break apart an application into microservices.

Keeping this in mind, it is helpful to be aware of the microservice offerings that Azure has ready for when an application’s architecture may benefit from them. While Azure offers many more tools to deploy microservices, here are a few of the top offerings:

- Azure Functions: Serverless functions that are triggered and run upon certain conditions such as an HTTP request or an NCRON expression for timed events. Functions can be written in JavaScript, C#, and more.

- Azure Container Apps: Deploys Docker containers in a serverless environment with many built-in options for scaling out.

- Azure Kubernetes Service (AKS): Manage Kubernetes clusters and containers within Azure. Good for more complex deployments.

For most microservice tools on Azure, the pricing model is generally pay per second of resources used. The apps that benefit the most cost-wise are small, quick services that are used infrequently. If a service is used more frequently or requires long run times, costs may be lower with a VM or Web App at a fixed monthly cost.
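The trade-off between pay-per-use and fixed monthly pricing comes down to a break-even calculation. The sketch below is a deliberately simplified model: the function names are hypothetical, and the prices in the usage section are placeholders rather than actual Azure prices (real consumption pricing involves additional factors, such as memory usage and per-execution charges).

```python
def monthly_consumption_cost(executions: int, seconds_per_execution: float,
                             price_per_second: float) -> float:
    """Cost of a pay-per-second service for one month of executions."""
    return executions * seconds_per_execution * price_per_second


def break_even_executions(fixed_monthly_cost: float,
                          seconds_per_execution: float,
                          price_per_second: float) -> float:
    """Executions per month at which pay-per-second matches the fixed cost."""
    return fixed_monthly_cost / (seconds_per_execution * price_per_second)


if __name__ == "__main__":
    # Placeholder numbers for illustration only, NOT real Azure pricing.
    fixed = 50.0       # fixed monthly cost of a VM or Web App
    duration = 0.5     # seconds per execution
    price = 0.00002    # price per second of execution

    threshold = break_even_executions(fixed, duration, price)
    print(f"Break-even at about {threshold:,.0f} executions per month")
    for executions in (100_000, 10_000_000):
        cost = monthly_consumption_cost(executions, duration, price)
        cheaper = "pay-per-use" if cost < fixed else "fixed monthly"
        print(f"{executions:>12,} executions: {cost:8.2f} -> {cheaper} is cheaper")
```

Below the break-even point the pay-per-use service is cheaper; above it, the fixed-cost option wins, which matches the guidance that small, infrequently used services benefit most.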

One of my favorite use cases for Azure Functions is automated email reminder services. Many applications benefit from sending periodic email reminders, which can be easily achieved by creating an Azure Function that is triggered nightly by an NCRON expression.
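For readers unfamiliar with NCRON expressions, the timer schedules used by Azure Functions have six fields, starting with seconds: `{second} {minute} {hour} {day} {month} {day-of-week}`. The following sketch shows how such an expression can be read. It is not the parser Azure uses, the helper names are hypothetical, and it only handles `*` and plain integers (no ranges, lists, or step values). The 2:00 AM schedule is an example choice, not one taken from a specific project.

```python
from datetime import datetime

FIELD_NAMES = ("second", "minute", "hour", "day", "month", "day_of_week")

# Example schedule: second 0, minute 0, hour 2, every day -> nightly at 02:00:00.
NIGHTLY_AT_2AM = "0 0 2 * * *"


def parse_ncron(expression: str) -> dict[str, str]:
    """Split a six-field NCRON expression into named fields."""
    parts = expression.split()
    if len(parts) != len(FIELD_NAMES):
        raise ValueError(f"expected {len(FIELD_NAMES)} fields, got {len(parts)}")
    return dict(zip(FIELD_NAMES, parts))


def matches(expression: str, moment: datetime) -> bool:
    """Check whether a moment satisfies the expression.

    Simplified: supports only '*' and plain integers in each field.
    """
    fields = parse_ncron(expression)
    actual = {
        "second": moment.second,
        "minute": moment.minute,
        "hour": moment.hour,
        "day": moment.day,
        "month": moment.month,
        "day_of_week": moment.isoweekday() % 7,  # Sunday = 0
    }
    return all(value == "*" or int(value) == actual[name]
               for name, value in fields.items())


if __name__ == "__main__":
    print(parse_ncron(NIGHTLY_AT_2AM))
    print(matches(NIGHTLY_AT_2AM, datetime(2024, 1, 15, 2, 0, 0)))
    print(matches(NIGHTLY_AT_2AM, datetime(2024, 1, 15, 14, 0, 0)))
```

In an actual Function, the expression is simply supplied as the timer trigger's schedule, and the platform takes care of invoking the reminder code at the matching times.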

Global Applications

One of the most difficult directions to scale, even today, is distributing your applications based on your customers’ locations (the z-axis of the AKF Cube) for increased availability and reduced latency. On Azure, this generally means managing and balancing an application across multiple regions. While successful orchestration of multi-region apps requires a lot of setup and planning from a professional Cloud Engineer, Azure has developed a handful of tools to assist applications aimed at a global scale. A few popular tools include:

- Azure Traffic Manager: A DNS-based global load balancer. Facilitates efficient routing to find the closest available region for each request.

- Azure Content Delivery Network (CDN): Provides edge-based caching of file content, making requests faster by bringing content closer to your users globally.

- Azure Front Door: A combined, global, edge-based CDN and traffic manager with enhanced analytics and security features. Allows caching of dynamic content and routes traffic through the nearest point of presence rather than through DNS servers.
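The core idea behind routing each request to the closest available region can be sketched in a few lines. This is an illustration of the concept only, not how Traffic Manager or Front Door is implemented: the `RegionEndpoint` class and `pick_endpoint` function are hypothetical, and the latency figures are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RegionEndpoint:
    """Hypothetical view of one regional deployment as seen by a client."""
    name: str
    latency_ms: float
    healthy: bool


def pick_endpoint(endpoints: list[RegionEndpoint]) -> Optional[RegionEndpoint]:
    """Return the healthy endpoint with the lowest latency, or None."""
    healthy = [endpoint for endpoint in endpoints if endpoint.healthy]
    return min(healthy, key=lambda endpoint: endpoint.latency_ms, default=None)


if __name__ == "__main__":
    # Made-up measurements for a client located in Europe.
    endpoints = [
        RegionEndpoint("westeurope", latency_ms=20.0, healthy=False),
        RegionEndpoint("eastus", latency_ms=95.0, healthy=True),
        RegionEndpoint("southeastasia", latency_ms=180.0, healthy=True),
    ]
    chosen = pick_endpoint(endpoints)
    print(chosen.name if chosen else "no healthy region available")
```

In this example the nearest region is unhealthy, so the request falls back to the next-closest one. This is the sense in which multi-region deployments improve availability as well as latency.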

Please research these tools further to learn their full feature sets and if they will help you deploy your application across regions. While global application development is a complex topic that is out of the scope of this article, as a Cloud Engineer or Architect, it is important to be aware of the technologies that exist which enable globalization of cloud applications.

Conclusion

In conclusion, scalability is a fundamental aspect of modern cloud applications, and Microsoft Azure offers a robust set of tools to support it. We explored the concepts of scaling up and scaling out and examined the AKF Cube’s axes of scalability: distribution, architecture, and customer location. We also surveyed the solutions Azure provides, from autoscaling tools for even distribution to microservice offerings and tools for running applications across multiple regions.

Summary of Key Points

- Scaling up vs. Scaling out: Understanding the trade-offs, advantages, and fundamentals of scaling.

- The AKF Cube: A practical and modern model for visualizing different aspects of scalability.

- Scaling out in Azure: Methods including Automatic Scaling and Virtual Machine Scale Sets.

- Azure Microservice Solutions: Products like Azure Functions and Container Apps facilitate efficient, independently scalable deployments.

- Global Applications on Azure: Tools like Azure Traffic Manager, Azure CDN, and Front Door for efficient multi-region deployments and increased availability.

For an in-depth analysis of scalability in apps and organizations, please read The Art of Scalability (2015) by Martin Abbott and Michael Fisher, the source of the AKF Cube. Extensive documentation for all the Azure tools mentioned here is available on the Microsoft Learn site. I encourage you to read further on these topics and explore the tools Azure has available.