Mosaic AI Gateway in Databricks: Go From Chaos to Control

Author: Olek Drobek

13 November, 2025

The “Wild West” of Enterprise GenAI

It’s an amazing time to be in the data and AI space. The last couple of years have felt like a gold rush, with an explosion of powerful generative AI models. It hasn’t been about just one or two providers for some time now. Your teams are experimenting with everything: OpenAI’s GPT-5 for its advanced reasoning, Anthropic’s Claude Sonnet for its coding capabilities, Google’s Gemini for its long context window, and open-source models for custom tasks, cost control, and agentic workflows.

This innovation is fantastic… until you’re the one responsible for managing it.

For platform and data engineering teams, this new landscape can feel like the “Wild West.” Every new model and provider brings a new set of challenges:

- Integration Chaos: Every provider may have its own API, its own Python SDK, and its own set of credentials. Your application code becomes a complex mess of conditional logic just to handle different endpoints, and managing all the secret keys is a challenge.

- No Cost Control: How do you answer a simple question like, “How much are we spending on LLMs?” It can get complicated to track, let alone control or budget for.

- Massive Security & Compliance Risks: How do you guarantee that a developer doesn’t accidentally send a list of customer PII to an external model? How do you provide a complete audit log for your compliance team when your usage is spread across a dozen different services?

This combination of integration overhead, runaway costs, and critical security gaps is the number one blocker for enterprises trying to move from exciting GenAI proofs of concept (PoCs) to fully governed, production-grade applications.

This is exactly the problem the Databricks Mosaic AI Gateway is built to solve. It’s the tool that tames the chaos and brings law and order to the Wild West of enterprise GenAI. In this post, we’ll cover what it is, why it’s so critical, and how you can get it running.

What is the Mosaic AI Gateway?

At its core, the Mosaic AI Gateway is a centralized proxy that sits between your applications and all your AI models.

The key concept is decoupling. Instead of your application code needing to know how to call OpenAI, Anthropic, and potentially a local open source model, it makes just one, simple call to a single Databricks endpoint. The Gateway takes it from there.
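To make the decoupling concrete, here is a minimal sketch of what "one call to one endpoint" can look like from application code. The workspace URL, endpoint name, token, and the `build_chat_request` helper are all hypothetical placeholders. The `/serving-endpoints/<name>/invocations` path and the chat-style `messages` payload reflect my understanding of the Databricks serving REST convention, so verify them against your own workspace.

```python
import json
from urllib import request


def build_chat_request(workspace_url, endpoint_name, token, user_message):
    """Build (but do not send) an HTTP request for a chat endpoint.

    Every argument value is a placeholder supplied by the caller.
    """
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    payload = {"messages": [{"role": "user", "content": user_message}]}
    return request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical values, for illustration only.
req = build_chat_request(
    "https://example.cloud.databricks.com",
    "my-gateway-endpoint",
    "<personal-access-token>",
    "Summarize our Q3 results in one sentence.",
)

# Sending is the same single call no matter which provider sits behind the endpoint:
# with request.urlopen(req) as resp:
#     print(json.load(resp))
```

The application only knows the endpoint name. Swapping the model behind it would not require touching this code.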

This simple-but-powerful design is what gives you all the control. The Gateway intercepts every request and applies your rules before forwarding it to the final destination.

And when Databricks says it unifies your entire AI ecosystem, they mean all of it. As the diagram shows, the Gateway acts as a single, protected front door for:

- External Models: Think providers like OpenAI, Anthropic, Google.

- Foundation Models: State-of-the-art models provisioned and hosted directly on Databricks.

- Custom Models: Your own fine-tuned models registered in Unity Catalog.

This abstraction is the foundation for everything else. By standing in the middle, the Gateway gives you a single point to enforce security, monitor costs, and ensure reliability.

From Chaos to Control: Key Features for Production

The real power of the AI Gateway comes from the features it enables by standing in the middle. It moves you from chaos to control.

First is Centralized Governance and Security. You can set fine-grained permissions and, crucially, rate limits to prevent runaway costs. The most powerful feature here is AI Guardrails. You can automatically block or mask sensitive PII before it ever leaves your network, and all activity is logged to a Unity Catalog table for a complete audit trail.

Next, you get Unified Observability and Cost Management. Because every request flows through the Gateway, all usage is logged to system tables. You can finally get one dashboard to see who is spending what, on which models. You can also enable payload logging to capture the actual prompts and responses for debugging and creating fine-tuning datasets.
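As a rough sketch of the "who is spending what" question, the helper below builds a SQL query that summarizes token usage per requester and served entity. The table names (`system.serving.endpoint_usage`, `system.serving.served_entities`) and column names are my assumptions about the serving system tables, and `usage_by_requester_sql` is a hypothetical helper, so check the schema in your workspace before running it.

```python
def usage_by_requester_sql(days=30):
    """Return a SQL string summarizing token usage per requester and entity.

    Table and column names are assumptions; adjust them to your schema.
    """
    if not isinstance(days, int) or days <= 0:
        raise ValueError("days must be a positive integer")
    return f"""
SELECT
  se.endpoint_name,
  se.entity_name,
  u.requester,
  COUNT(*) AS request_count,
  SUM(u.input_token_count) AS input_tokens,
  SUM(u.output_token_count) AS output_tokens
FROM system.serving.endpoint_usage AS u
JOIN system.serving.served_entities AS se
  ON u.served_entity_id = se.served_entity_id
WHERE u.request_time >= current_timestamp() - INTERVAL {days} DAYS
GROUP BY se.endpoint_name, se.entity_name, u.requester
ORDER BY input_tokens DESC
""".strip()


query = usage_by_requester_sql(days=7)

# In a Databricks notebook, where `spark` is predefined:
# display(spark.sql(query))
```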

Finally, it provides Production-Ready Performance and Reliability. You can A/B test models by splitting traffic, for example sending 90% of requests to your production model and 10% to a new one to compare cost and quality. You can also configure fallbacks, so if one provider’s API fails, the Gateway automatically reroutes the request to a backup, keeping your application online.
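The traffic split and fallback ideas can be illustrated with a toy simulation. This is not how the Gateway is implemented; it is a plain-Python sketch of the concept, and the model names and the `route_request` helper are made up for the example.

```python
import random


def route_request(routes, is_healthy, rng):
    """Pick a served entity by traffic weight, falling back if it is down.

    routes: list of (name, traffic_percentage) pairs.
    is_healthy: callable returning False for entities that are failing.
    rng: a random.Random instance, passed in so runs can be seeded.
    """
    names = [name for name, _ in routes]
    weights = [pct for _, pct in routes]
    first_choice = rng.choices(names, weights=weights, k=1)[0]
    # Try the weighted pick first, then the remaining entities in listed order.
    candidates = [first_choice] + [n for n in names if n != first_choice]
    for name in candidates:
        if is_healthy(name):
            return name
    raise RuntimeError("all served entities are unavailable")


rng = random.Random(0)  # seeded so the run is repeatable
routes = [("production-model", 90), ("candidate-model", 10)]

# Normal operation: requests are spread roughly 90/10.
counts = {"production-model": 0, "candidate-model": 0}
for _ in range(10_000):
    counts[route_request(routes, lambda name: True, rng)] += 1
print(counts)

# Simulated outage of the production model: the request falls back.
during_outage = route_request(routes, lambda name: name != "production-model", rng)
print(during_outage)  # candidate-model
```

Keeping the routing decision outside the application is the point: the caller never sees which entity answered unless it inspects the logs.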

How to Get Started

Note: These steps will only work as intended with a full Databricks account. Trial workspaces and the Free Edition will run into issues with payload logging and model serving, respectively.

In this example, we will create a model serving endpoint that serves two external models: one from Google and one from OpenAI.

- Create a Model Serving Endpoint

In the Serving page, click the Create Serving Endpoint button in the top right. Fill out the name field and explore the page.

- Link Your Model and Add Your Key

We’ll start with a Google model. In Select an entity, click Foundation models and find Google Cloud Vertex AI. We’ll have to fill out a few fields for this. The first is the region of the Vertex AI project you want to access. The next is a private key from the Google Cloud Vertex AI platform. This gave me some trouble at first, so I recommend reading this explanation of how to get the string for the key: https://kb.databricks.com/machine-learning/invalid_parameter_value-error-when-creating-a-google-vertex-ai-serving-endpoint

Then, you’ll have to add the project ID of your Google Cloud project (found in your Google Cloud console). We’ll choose Chat as the Task and select gemini-2.5-flash-lite as the Google model of choice.

We’ll do the same thing for an OpenAI model. We’ll select the OpenAI API type, add the OpenAI key (much more straightforward: you just paste the API key string into the textbox), select the Chat Task, and choose gpt-4o-mini as the model of choice.

- Enable AI Gateway Features

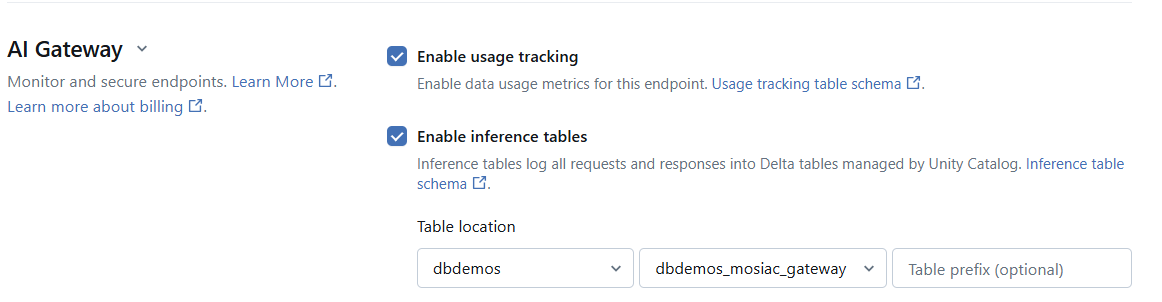

After confirming the served entities, scroll down to the AI Gateway section.

We will enable both usage tracking and inference tables for comprehensive observability of activity on our endpoint.

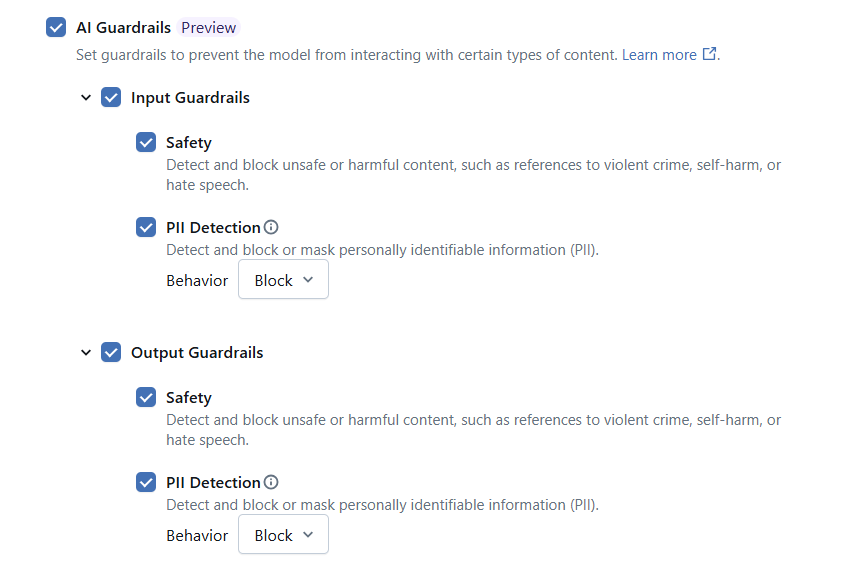

We can also optionally enable AI Guardrails, which prevent requests containing unsafe or harmful content or PII from reaching the model at the endpoint (for PII, you can choose to either block the request or mask the data). Guardrails act as a checking layer on both incoming requests and outgoing responses, verifying that each one meets the criteria you set.

Finally, we can set rate limits in terms of queries per minute and tokens per minute. These granular usage limits can be set on the endpoint, or on users, groups, or service principals.

When dealing with external models in model serving endpoints on Databricks, you can enable fallbacks to automatically route failed requests to different served entities in the endpoint.

After these are all set to your specifications, you can click create and start interacting with the endpoint.
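For reference, the options chosen in the UI above can also be written down as a single settings payload. The sketch below assembles one as a plain Python dict. The field names (`usage_tracking_config`, `inference_table_config`, `guardrails`, `rate_limits`, `fallback_config`) and the `"BLOCK"` value reflect my understanding of the AI Gateway settings in the serving endpoints REST API and should be treated as assumptions. The catalog, schema, and table prefix are hypothetical.

```python
import json


def build_ai_gateway_config(catalog, schema, table_prefix,
                            calls_per_minute, pii_behavior="BLOCK"):
    """Assemble an AI Gateway settings payload as a plain dict.

    Field names are assumptions; confirm them against the REST API reference.
    """
    return {
        # Usage tracking writes request metadata to system tables.
        "usage_tracking_config": {"enabled": True},
        # Inference tables capture request and response payloads.
        "inference_table_config": {
            "enabled": True,
            "catalog_name": catalog,
            "schema_name": schema,
            "table_name_prefix": table_prefix,
        },
        # Guardrails are applied to both requests and responses.
        "guardrails": {
            "input": {"safety": True, "pii": {"behavior": pii_behavior}},
            "output": {"safety": True, "pii": {"behavior": pii_behavior}},
        },
        # One per-user limit; "endpoint" would apply it to the whole endpoint.
        "rate_limits": [
            {"key": "user", "calls": calls_per_minute, "renewal_period": "minute"},
        ],
        "fallback_config": {"enabled": True},
    }


# Hypothetical Unity Catalog location, for illustration only.
gateway_config = build_ai_gateway_config(
    "main", "ai_gateway_logs", "chat_endpoint", calls_per_minute=100
)
print(json.dumps(gateway_config, indent=2))
```

Keeping these settings in a reviewed file makes it easier to see when a rate limit or guardrail changes.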

- Query the Endpoint

Click on the model serving endpoint, then click the Use button in the top right. You will be taken to the Playground, where you can start interacting with the model serving endpoint with Mosaic AI Gateway features enabled.
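Outside the Playground, the endpoint can also be queried from code. The sketch below uses the MLflow Deployments client and assumes the endpoint returns an OpenAI-style chat response with a `choices` list, which is worth confirming for your chosen models. The `ask` and `extract_reply` helpers and the sample response are hypothetical.

```python
def extract_reply(response):
    """Return the assistant text from an OpenAI-style chat response dict."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["message"]["content"]


def ask(endpoint_name, question):
    """Send one chat message to a serving endpoint and return the reply text."""
    # Imported here so extract_reply works even without mlflow installed.
    from mlflow.deployments import get_deploy_client

    client = get_deploy_client("databricks")
    response = client.predict(
        endpoint=endpoint_name,
        inputs={"messages": [{"role": "user", "content": question}]},
    )
    return extract_reply(response)


# A hand-written response in the assumed shape, for illustration only.
sample_response = {
    "choices": [
        {"message": {"role": "assistant", "content": "Hello from the gateway."}}
    ]
}
print(extract_reply(sample_response))  # Hello from the gateway.

# Against a real workspace (requires mlflow and Databricks credentials):
# print(ask("my-gateway-endpoint", "What is the Mosaic AI Gateway?"))
```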

Tips for Power Users

Once you have the basics down, you can use the AI Gateway to truly optimize your production workflows.

- Go Programmatic: While the UI is great for getting started, you should manage your production endpoints as code. You can do everything, including creating endpoints, configuring traffic splits, and setting rate limits, programmatically using the MLflow Deployments API. This allows you to integrate your endpoint management directly into your CI/CD pipelines.

- Don’t Reinvent the Wheel: Don’t spend hours building your own monitoring dashboard. Databricks provides an example AI Gateway dashboard [https://docs.databricks.com/aws/en/assets/files/ai-gateway-dashboard.lvdash-b53c450b490f540340971e4e14960781.json] that you can download and import directly. It connects to the system tables and payload tables (if enabled on the endpoint) and gives you instant visibility into costs, usage, and errors.
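As a starting point for the "Go Programmatic" tip, here is a minimal sketch of defining the two-model endpoint from the walkthrough as code with the MLflow Deployments client. The served entity names, secret scope, secret key names, region, and project ID are hypothetical, and the provider-specific config keys are my best understanding of the external model schema, so validate the payload against the current documentation before using it in a pipeline.

```python
def secret_ref(scope, key):
    """Format a Databricks secret reference so no key is stored in code."""
    return "{{secrets/" + scope + "/" + key + "}}"


def build_endpoint_config(secret_scope):
    """Return an endpoint config with two external chat models and a 90/10 split."""
    return {
        "served_entities": [
            {
                "name": "openai-chat",
                "external_model": {
                    "name": "gpt-4o-mini",
                    "provider": "openai",
                    "task": "llm/v1/chat",
                    "openai_config": {
                        "openai_api_key": secret_ref(secret_scope, "openai_api_key"),
                    },
                },
            },
            {
                "name": "gemini-chat",
                "external_model": {
                    "name": "gemini-2.5-flash-lite",
                    "provider": "google-cloud-vertex-ai",
                    "task": "llm/v1/chat",
                    "google_cloud_vertex_ai_config": {
                        "private_key": secret_ref(secret_scope, "vertex_private_key"),
                        "region": "us-central1",          # placeholder region
                        "project_id": "my-gcp-project",   # placeholder project
                    },
                },
            },
        ],
        "traffic_config": {
            "routes": [
                {"served_model_name": "openai-chat", "traffic_percentage": 90},
                {"served_model_name": "gemini-chat", "traffic_percentage": 10},
            ]
        },
    }


def create_endpoint(name, config):
    """Create the endpoint; requires mlflow and Databricks credentials."""
    from mlflow.deployments import get_deploy_client

    client = get_deploy_client("databricks")
    return client.create_endpoint(name=name, config=config)


endpoint_config = build_endpoint_config("genai-secrets")

# In a CI/CD job with workspace credentials configured:
# create_endpoint("my-gateway-endpoint", endpoint_config)
```

Building the config in a pure function keeps it easy to unit test and review, with the workspace call isolated in one place.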

Conclusion: Moving from GenAI PoCs to Governed Production

The Databricks Mosaic AI Gateway is the critical bridge that takes generative AI from a “cool-but-risky PoC” to a secure, scalable, and fully governed part of your enterprise stack.